Data has become one of the most valuable assets for modern businesses. Companies that understand their data gain clarity, make better decisions, and create stronger strategies. A data analyst plays a central role in this process by collecting, cleaning, transforming, and visualizing data so managers and teams can act on insights instead of assumptions.

This blog covers the full data analyst workflow and the tools used at every step. It also explains how platforms like Power BI, Tableau, Excel, and Python help businesses interpret and visualize data more effectively.

Understanding the Data Analyst Workflow

A data analyst follows a structured process that ensures data is reliable and insights are meaningful. The main stages include:

- Data collection

- Data cleaning

- Data manipulation and transformation

- Data interpretation

- Data visualization

- Reporting and decision support

Each stage requires specific tools and techniques to maintain accuracy and efficiency.

Step 1. Data Collection

Data comes from various sources such as CRM systems, websites, apps, surveys, sales reports, marketing platforms, databases, and spreadsheets. Analysts gather raw data and prepare it for the next step.

Common data sources and collection tools

Excel files

SQL databases

APIs

Cloud data warehouses

Google Sheets

Python is often used to automate data collection from APIs or large data systems, especially when the scale is too big for manual work.
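Here is a minimal sketch of automated API collection. It assumes a hypothetical endpoint that returns JSON of the form {"records": [...]} one page at a time, with an empty list once the data runs out. The fetch_page callable stands in for the real HTTP request, for example a wrapper around urllib.request.urlopen. This keeps the paging logic testable without a network connection.

```python
import json
from typing import Callable, Iterator


def collect_all_records(fetch_page: Callable[[int], str]) -> Iterator[dict]:
    """Yield every record from a paginated JSON API, one page at a time."""
    page = 1
    while True:
        payload = json.loads(fetch_page(page))
        records = payload.get("records", [])
        if not records:  # an empty page signals that everything has been read
            return
        yield from records
        page += 1


# Hypothetical stand-in for an HTTP call so the sketch runs offline.
FAKE_PAGES = {
    1: '{"records": [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.5}]}',
    2: '{"records": [{"id": 3, "amount": 42.0}]}',
}


def fake_fetch(page: int) -> str:
    return FAKE_PAGES.get(page, '{"records": []}')


rows = list(collect_all_records(fake_fetch))
print(f"Collected {len(rows)} records")
```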

Step 2. Data Cleaning

Data cleaning is often the most time-consuming task for analysts, and one of the most important. Clean data ensures that insights are accurate and trustworthy.

Cleaning tasks include

Filling or flagging missing values

Correcting inconsistent formatting

Removing duplicate entries

Handling outliers

Standardizing columns and labels

Tools used for cleaning

Excel for smaller datasets

Python libraries like Pandas and NumPy for larger datasets

SQL for database-level cleanup
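The sketch below illustrates database-level cleanup by running three common fixes as SQL statements. It uses an in-memory SQLite database and a hypothetical customers table so that it runs anywhere. The deduplication query relies on SQLite's rowid, so other databases would typically use a window function such as ROW_NUMBER() instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical raw table with formatting problems, a duplicate, and a gap.
conn.executescript("""
CREATE TABLE customers (email TEXT, region TEXT);
INSERT INTO customers VALUES
  ('  Ana@Example.com', 'West'),
  ('ana@example.com',   'West'),
  ('raj@example.com',   NULL);
""")

# Standardize formatting: trim whitespace and lowercase e-mail addresses.
conn.execute("UPDATE customers SET email = LOWER(TRIM(email))")
# Fill missing values with an explicit placeholder.
conn.execute("UPDATE customers SET region = 'Unknown' WHERE region IS NULL")
# Remove duplicates, keeping the first row (lowest rowid) for each e-mail.
conn.execute("""
DELETE FROM customers
WHERE rowid NOT IN (SELECT MIN(rowid) FROM customers GROUP BY email)
""")
conn.commit()

cleaned = conn.execute(
    "SELECT email, region FROM customers ORDER BY email"
).fetchall()
print(cleaned)
```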

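Python’s Pandas library is widely used for this step because it handles datasets well beyond what is comfortable in a spreadsheet and lets analysts script repetitive cleaning steps so they can be rerun on every new extract.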
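Here is a minimal Pandas sketch of the cleaning tasks listed above, run on a small hypothetical sales extract. The column names, the median fill for missing amounts, and the 10,000 outlier threshold are illustrative assumptions. The right choice for each depends on the data and the business question.

```python
import numpy as np
import pandas as pd

# Hypothetical raw sales extract with typical quality problems.
raw = pd.DataFrame({
    "Order ID": [101, 102, 102, 103, 104],
    " Region ": ["West", "east", "east", "EAST ", None],
    "Amount": [250.0, 90.0, 90.0, np.nan, 12000.0],
})

df = raw.copy()
# Standardize column labels: strip spaces, lowercase, snake_case.
df.columns = df.columns.str.strip().str.lower().str.replace(" ", "_")
# Correct inconsistent formatting in text values ("east", "EAST " -> "East").
df["region"] = df["region"].str.strip().str.title()
# Remove exact duplicate rows (only detectable after standardizing).
df = df.drop_duplicates()
# Handle missing values: label unknown regions, fill amounts with the median.
df["region"] = df["region"].fillna("Unknown")
df["amount"] = df["amount"].fillna(df["amount"].median())
# Flag (rather than delete) outliers above an illustrative, assumed cap.
df["is_outlier"] = df["amount"] > 10000

print(df)
```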

Step 3. Data Manipulation and Transformation

Once data is clean, analysts structure it in a way that supports analysis. This includes merging datasets, creating new calculated fields, filtering segments, and preparing final tables for visualization.
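The steps in this paragraph map onto a few Pandas calls. The minimal sketch below uses two hypothetical tables, order lines and a product lookup. It merges them on a shared key, adds a calculated revenue field, filters one segment, and aggregates a final table that a dashboard could consume.

```python
import pandas as pd

# Hypothetical cleaned tables: order lines and a product lookup.
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "product_id": ["A", "B", "A", "C"],
    "quantity": [3, 1, 5, 2],
})
products = pd.DataFrame({
    "product_id": ["A", "B", "C"],
    "unit_price": [20.0, 150.0, 45.0],
    "category": ["Accessories", "Hardware", "Accessories"],
})

# Merge datasets on a shared key; a left join keeps every order.
sales = orders.merge(products, on="product_id", how="left")
# Create a calculated field.
sales["revenue"] = sales["quantity"] * sales["unit_price"]
# Filter a segment.
accessories = sales[sales["category"] == "Accessories"]
# Prepare a final table for visualization: revenue per category.
summary = sales.groupby("category", as_index=False)["revenue"].sum()
print(summary)
```

A left join keeps orders even when a product is missing from the lookup. Gaps then show up as missing prices instead of rows disappearing silently.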

Key Python libraries

Pandas for shaping and restructuring data

NumPy for mathematical operations

OpenPyXL for Excel automation

Matplotlib and Seaborn for quick exploratory charts

Excel is also useful here for quick calculations, pivot tables, and manual checks.
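When the same pivot table has to be rebuilt for every new extract, it can be scripted instead. The sketch below is a minimal Pandas equivalent of an Excel PivotTable. It uses hypothetical monthly sales, with regions as rows, months as columns, and summed revenue as values.

```python
import pandas as pd

# Hypothetical monthly sales records.
sales = pd.DataFrame({
    "region": ["North", "North", "South", "South", "South"],
    "month": ["Jan", "Feb", "Jan", "Jan", "Feb"],
    "revenue": [100, 120, 80, 40, 95],
})

# Rows = region, columns = month, values = summed revenue.
pivot = pd.pivot_table(
    sales,
    index="region",
    columns="month",
    values="revenue",
    aggfunc="sum",
    fill_value=0,  # show 0 instead of NaN for empty region/month cells
)
print(pivot)
```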

Power BI and Tableau can connect directly to cleaned datasets and support data modeling, such as relationships between tables and calculated measures, for complex dashboards.

Step 4. Data Interpretation

Data interpretation turns numbers into meaning. Analysts identify patterns, trends, risks, and opportunities. This step helps answer questions such as:

Which product is performing best?

Where are we losing customers?

Which marketing channel generates the highest ROI?

What is the predicted growth for next quarter?
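Questions like the ROI one often reduce to a simple formula, ROI = (revenue - cost) / cost. Here is a short sketch with hypothetical channel figures. In practice, attributing revenue to a channel is itself a modeling decision.

```python
# Hypothetical spend and attributed revenue per marketing channel.
channels = {
    "Email": {"cost": 2_000.0, "revenue": 9_000.0},
    "Paid search": {"cost": 10_000.0, "revenue": 25_000.0},
    "Social": {"cost": 5_000.0, "revenue": 6_000.0},
}


def roi(cost: float, revenue: float) -> float:
    """Return on investment as a fraction: (revenue - cost) / cost."""
    return (revenue - cost) / cost


roi_by_channel = {name: roi(**figures) for name, figures in channels.items()}
best = max(roi_by_channel, key=roi_by_channel.get)

# Print channels from highest to lowest ROI.
for name, value in sorted(roi_by_channel.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:.0%}")
```

With these numbers, Email has the highest ROI even though Paid search brings in the most revenue. That is exactly the kind of distinction this step is meant to surface.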

Python helps with deeper statistical analysis, forecasting, and machine learning models.

Excel supports quick summaries and descriptive insights.

Power BI and Tableau offer interactive ways to interpret data through structured dashboards.

Step 5. Data Visualization

Visualization is where insights become clear. A good chart or dashboard allows decision-makers to understand performance at a glance.

Common visualization platforms

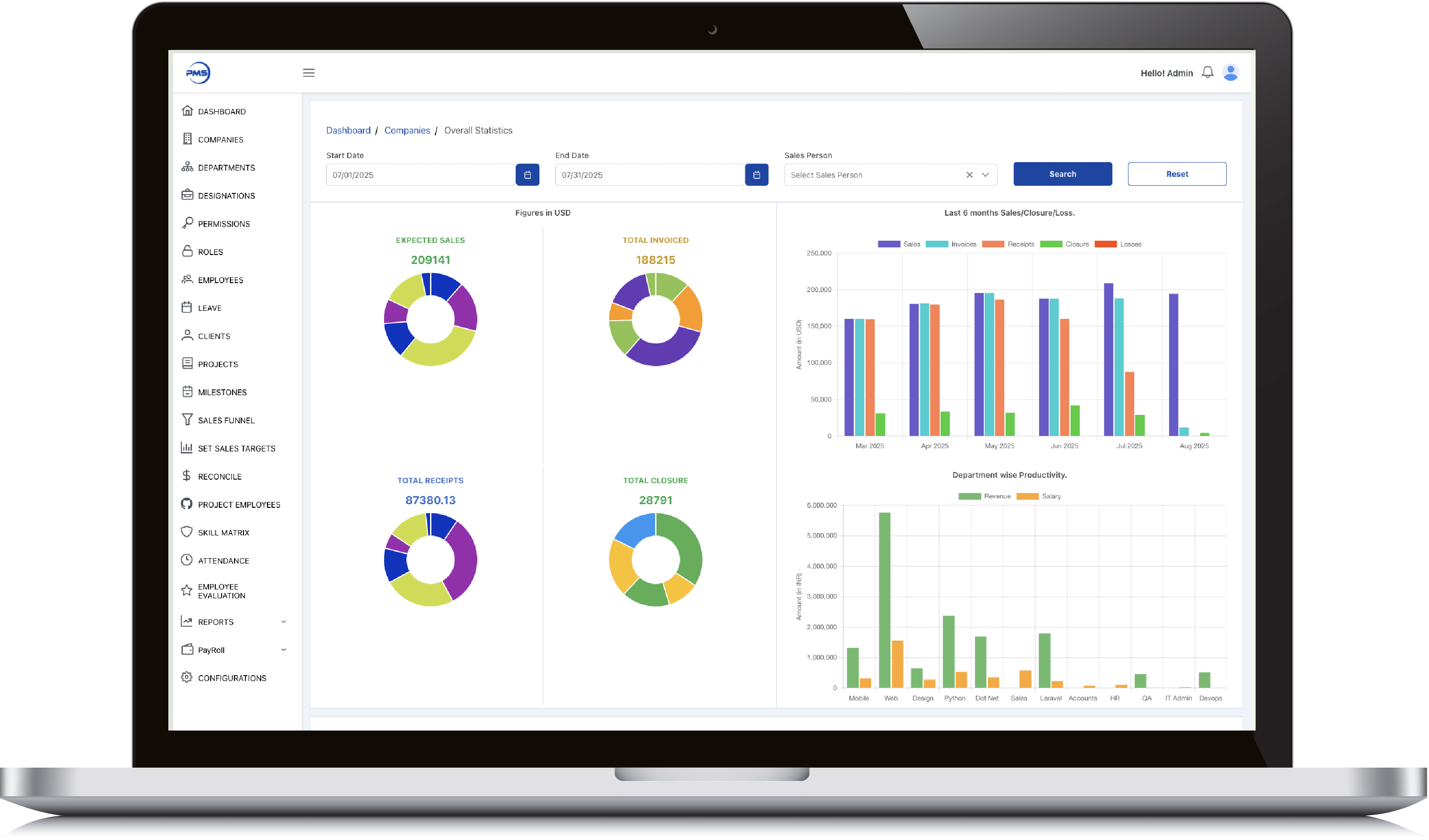

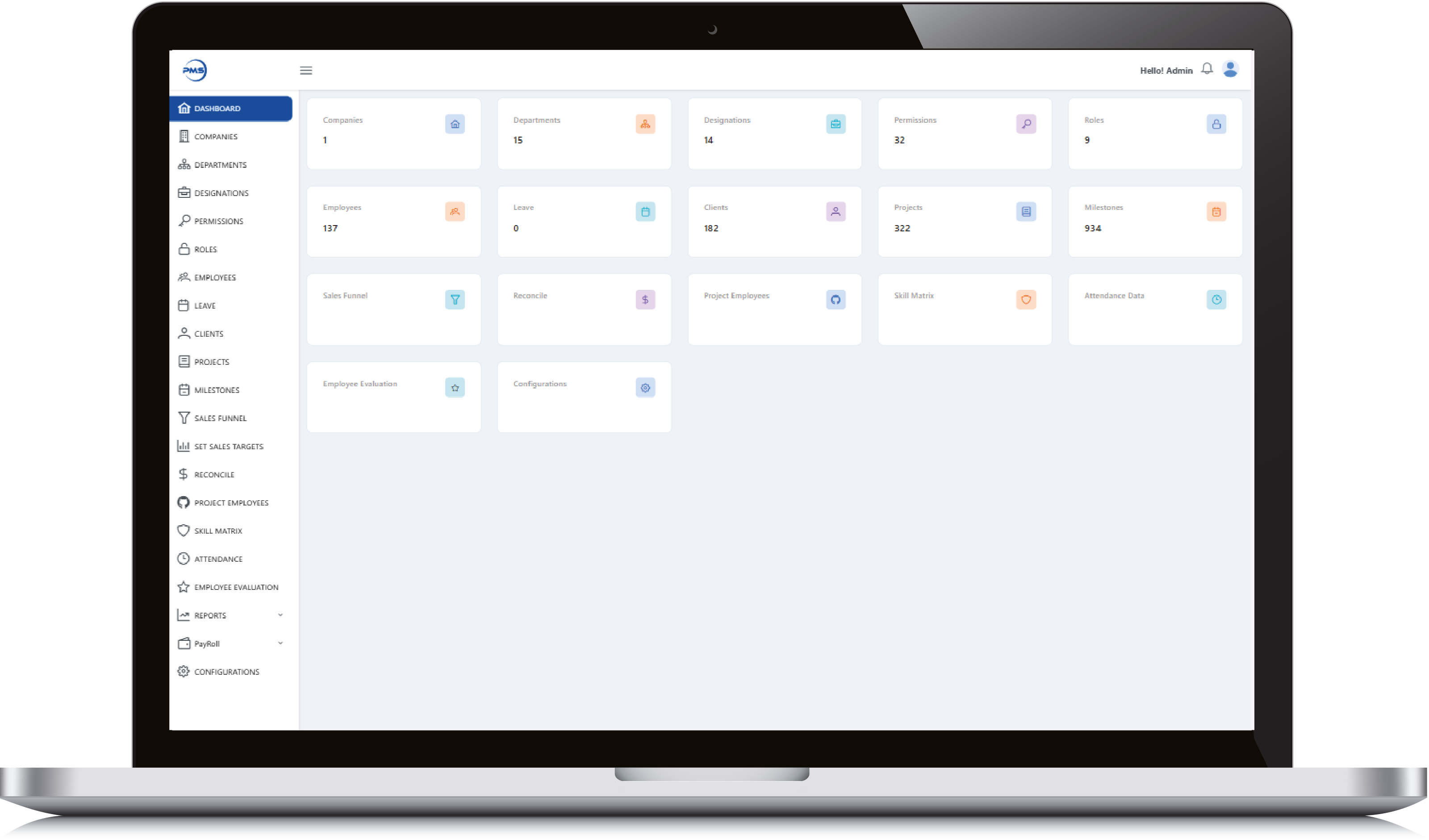

Power BI – best for business reporting, live dashboards, and cross-department analytics

Tableau – strong for deep visual storytelling and complex datasets

Excel – suitable for simple charts and reports

Python (Matplotlib, Plotly, Seaborn) – useful for custom charts, automation, and advanced visuals

Visualization turns prepared data into charts, heatmaps, KPI cards, maps, funnels, and comparison graphs that reveal business insights.
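Here is a minimal Matplotlib sketch of a comparison graph, using hypothetical revenue per region. The Agg backend renders without a display, which suits automated jobs. In this sketch the chart is written to an in-memory PNG buffer; in practice it would usually be saved to a file or embedded in a report.

```python
import io

import matplotlib

matplotlib.use("Agg")  # render off-screen, e.g. inside a scheduled job
import matplotlib.pyplot as plt

# Hypothetical revenue per region (in thousands).
regions = ["North", "South", "East", "West"]
revenue = [420, 310, 275, 390]

fig, ax = plt.subplots(figsize=(6, 3.5))
bars = ax.bar(regions, revenue, color="steelblue")
ax.bar_label(bars)  # print each value on top of its bar
ax.set_title("Revenue by region")
ax.set_ylabel("Revenue (thousands)")

buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=100, bbox_inches="tight")
plt.close(fig)  # free the figure once it has been written
png_bytes = buffer.getvalue()
```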

Step 6. Reporting and Decision Making

The final step is presenting insights in a format that leadership teams can act on. This can include dashboards, reports, presentations, and automated refresh systems.

Power BI and Tableau support dashboards that refresh automatically from connected data sources, either on a schedule or through live connections.

Excel remains common for static reports that are prepared and reviewed manually.

Python can automate recurring reports, notifications, and scheduled data pipelines.
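Here is a minimal sketch of report automation using only the standard library. It summarizes hypothetical order records into a per-region CSV. The record fields and report layout are assumptions. Running it on a schedule would be handled by a tool such as cron, Windows Task Scheduler, or an orchestrator like Apache Airflow.

```python
import csv
import io
from collections import defaultdict
from datetime import date


def build_weekly_report(rows: list[dict], report_date: date) -> str:
    """Summarize order count and revenue per region as CSV text."""
    totals = defaultdict(lambda: {"orders": 0, "revenue": 0.0})
    for row in rows:
        bucket = totals[row["region"]]
        bucket["orders"] += 1
        bucket["revenue"] += row["amount"]

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["report_date", "region", "orders", "revenue"])
    for region in sorted(totals):  # stable, alphabetical row order
        writer.writerow([
            report_date.isoformat(),
            region,
            totals[region]["orders"],
            f"{totals[region]['revenue']:.2f}",
        ])
    return out.getvalue()


# Hypothetical records; a real job would load these from a database or API.
sample = [
    {"region": "West", "amount": 250.0},
    {"region": "East", "amount": 90.0},
    {"region": "West", "amount": 60.5},
]
report = build_weekly_report(sample, date(2024, 1, 8))
print(report)
```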

The goal is simple: turn data into decisions.

How BrainerHub Supports Data Analysis and Visualization

BrainerHub builds end-to-end data solutions that support this entire workflow. Our team works with Power BI, Tableau, Excel, Python, SQL, and cloud-based data systems to help businesses:

Set up clean and organized data pipelines

Automate cleaning and transformation processes

Build dashboards for sales, marketing, finance, supply chain and operations

Create reports that executives can rely on

Visualize complex data in simple, understandable formats

Create predictive models using Python and machine learning

We help companies move from scattered spreadsheets to structured, intelligent reporting systems.

Conclusion

A strong data analyst workflow brings clarity to a business. When data is cleaned, interpreted, and visualized properly, it becomes a powerful decision-making tool. Whether a company uses Power BI, Tableau, Excel or Python, the end goal is always the same: make decisions based on facts, not assumptions.

When the right tools and processes come together, data becomes one of the strongest assets a business can have.

Do you have a project in mind? Talk to the experts.
